HPC Introduction

Chris Huxley (talk | contribs) |

Latest revision as of 10:52, 29 January 2024

Introduction

As of TUFLOW build 2017-09-AA, TUFLOW offers HPC (Heavily Parallelised Compute) as an alternative 2D Shallow Water Equation (SWE) solver to TUFLOW Classic. Whereas TUFLOW Classic is limited to running a simulation on a single CPU core, HPC parallelises the TUFLOW model, allowing modellers to run a single model across multiple CPU cores or GPU graphics cards (which utilise thousands of smaller CUDA cores). Simulations using GPU hardware have been shown to provide significantly quicker model run times for TUFLOW users.

In general, most of the functionality and features of TUFLOW Classic are available in HPC. Additionally, HPC offers several advanced features not supported in Classic, including:

- Quadtree and sub-grid sampling

- High resolution map output grids

- Groundwater infiltration and sub-surface flows

- Wu turbulence formulation

- TMR bridge inputs (2d_bg)

Solution Scheme, Cell Discretisation and Parallelisation

TUFLOW HPC is an explicit solver for the full 2D Shallow Water Equations (SWE), including a sub-grid scale eddy viscosity model. The scheme is both volume and momentum conserving, is 2nd order in space and 4th order in time, with adaptive or fixed timestepping. It is unconditionally stable. TUFLOW HPC's computational approach differs from TUFLOW Classic, which is a 2nd order (space) implicit finite difference solver. Both TUFLOW HPC and Classic solve the 2D SWE on the same uniform Cartesian grid configuration. Computationally each 2D cell includes 9 sub-grid points.

The ZC point:

- Defines the volume of active water (cell volume is based on a flat square cell that wets and dries at a height of ZC plus the Cell Wet/Dry Depth);

- Controls when a cell becomes wet and dry (note that cell sides can also wet and dry); and

- Determines the bed slope when testing for the upstream controlled flow regime.

The ZU and ZV points:

- Control how water is conveyed from one cell to another;

- Represent where the momentum equation terms are centred and where upstream controlled flow regimes are applied;

- Deactivate if the cell has dried (based on the ZC point) and cannot flow; and

- Wet and dry independently of the cell wetting or drying (see Cell Wet/Dry Depth). This allows for the modelling of “thin” obstructions, such as fences and embankments that are thin relative to the cell size (e.g. a concrete levee).

The ZH points:

- Play no role hydraulically; this point location is used for output processing; and

- Are the only elevations written to the SMS .2dm mesh file (by default, binary output is interpolated/extrapolated to the cell corners).

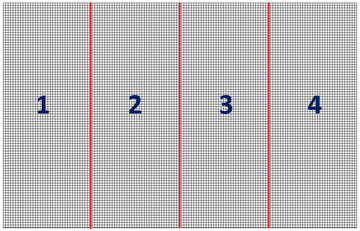

Within the above sub-grid framework, TUFLOW HPC computes the time derivatives of cell-averaged water depth, u-velocity and v-velocity on a cell-by-cell basis and evolves the model using an explicit ODE solver. The cell-based derivative calculations are largely independent of each other, making it possible to run this solution scheme across multiple processors or GPU cards. Parallelisation is done by breaking up the model into vertical ribbons. Each ribbon of the model is run on a different processor (or GPU card), with boundary information shared between processors at each timestep.
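The ribbon approach can be illustrated with a small sketch. This is not TUFLOW code: the function names (`split_into_ribbons`, `smooth_step`, `ribbon_step`) and the toy three-point averaging update are assumptions made purely for illustration, standing in for the real cell-by-cell derivative calculation. The sketch splits a grid into vertical ribbons, gives each ribbon a one-column copy of its neighbours' boundary values (the shared boundary information), updates each ribbon independently, and checks that the stitched result matches an update of the whole grid.

```python
def split_into_ribbons(n_cols, n_ribbons):
    """Return (start, stop) column ranges for vertical ribbons of near-equal width."""
    base, extra = divmod(n_cols, n_ribbons)
    ranges, start = [], 0
    for i in range(n_ribbons):
        stop = start + base + (1 if i < extra else 0)
        ranges.append((start, stop))
        start = stop
    return ranges


def smooth_step(grid):
    """Toy explicit update (hypothetical stand-in for the real solver step):
    each cell becomes the mean of itself and its left and right neighbours.
    Columns on the edge of the grid reuse their own value for the missing side."""
    n_cols = len(grid[0])
    new_grid = []
    for row in grid:
        new_row = []
        for j in range(n_cols):
            left = row[j - 1] if j > 0 else row[j]
            right = row[j + 1] if j < n_cols - 1 else row[j]
            new_row.append((left + row[j] + right) / 3.0)
        new_grid.append(new_row)
    return new_grid


def ribbon_step(grid, n_ribbons):
    """Apply smooth_step ribbon by ribbon. Each ribbon is extended by one
    column of neighbouring data on each interior side before it is updated;
    only the columns the ribbon owns are written back."""
    n_cols = len(grid[0])
    result = [[0.0] * n_cols for _ in grid]
    for start, stop in split_into_ribbons(n_cols, n_ribbons):
        lo = max(start - 1, 0)          # left boundary column, if any
        hi = min(stop + 1, n_cols)      # right boundary column, if any
        local = [row[lo:hi] for row in grid]
        updated = smooth_step(local)    # could run on a separate processor
        for r, row in enumerate(updated):
            result[r][start:stop] = row[start - lo:stop - lo]
    return result


grid = [[float((3 * r + 5 * c) % 7) for c in range(10)] for r in range(4)]
assert ribbon_step(grid, 3) == smooth_step(grid)
```

In this sketch the ribbons are processed in a loop. In a parallel run each ribbon's update would execute concurrently, and the boundary columns would be exchanged between devices once per timestep.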

Mass Conservation and Timestep

The explicit finite volume solution scheme utilised in HPC is mass conserving by construction (0% mass error). This differs from TUFLOW Classic, which can continue to simulate a model with some volume error because it is an implicit finite difference scheme. The stability of the explicit finite volume scheme used in TUFLOW HPC is linked to the timestep, flow velocities, water depth and eddy viscosity. The maximum timestep that can be used while maintaining model stability changes as the model evolves. While it is possible to choose a fixed timestep ahead of time (as in TUFLOW Classic), shorter run times and guaranteed model stability from start to finish may be achieved through the use of adaptive timestepping, where the solver continually modifies the timestep based on various stability criteria. This is explained in more detail on the Adaptive Timestepping page.
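As a rough illustration of how a timestep limit can depend on velocity, depth and eddy viscosity, the sketch below applies two textbook constraints for explicit schemes: a Courant-type limit based on flow speed plus shallow water wave celerity, and a diffusion-type limit based on eddy viscosity. The function name `stable_timestep`, the form of the criteria and the default limit values are assumptions for illustration only. The criteria actually used by the solver are documented on the Adaptive Timestepping page.

```python
import math

G = 9.81  # gravitational acceleration (m/s^2)


def stable_timestep(dx, cells, courant_limit=1.0, diffusion_limit=0.3):
    """Return the largest timestep (s) satisfying both illustrative limits.

    dx    : cell size (m)
    cells : iterable of (depth, u, v, eddy_viscosity) tuples for each cell
    The limit values are hypothetical defaults, not solver settings.
    """
    dt = math.inf
    for depth, u, v, eddy_viscosity in cells:
        if depth <= 0.0:
            continue  # dry cells place no constraint on the timestep
        # Courant-type limit: information must not cross a cell in one step.
        wave_speed = max(abs(u), abs(v)) + math.sqrt(G * depth)
        dt = min(dt, courant_limit * dx / wave_speed)
        # Diffusion-type limit from the eddy viscosity term.
        if eddy_viscosity > 0.0:
            dt = min(dt, diffusion_limit * dx * dx / eddy_viscosity)
    return dt


# Deeper, faster flow forces a smaller timestep than shallow, slow flow.
shallow = stable_timestep(10.0, [(0.5, 0.2, 0.0, 0.1)])
deep = stable_timestep(10.0, [(4.0, 2.0, 0.5, 0.1)])
assert deep < shallow
```

Because depths and velocities change during a simulation, a limit of this kind has to be re-evaluated as the model evolves, which is the motivation for adaptive timestepping.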

Compatible Graphic Cards (GPU)

TUFLOW HPC’s GPU hardware module is only compatible with CUDA-enabled NVIDIA GPU cards. AMD GPU cards are NOT compatible. A list of CUDA-enabled GPUs can be found on the following website: http://developer.nvidia.com/cuda-gpus . To check if your computer has an NVIDIA GPU and if it is CUDA enabled:

- Right click on the Windows desktop;

- If you see “NVIDIA Control Panel” or “NVIDIA Display” in the pop up dialogue, the computer has an NVIDIA GPU;

- Click on “NVIDIA Control Panel” or “NVIDIA Display” in the pop up dialogue;

- The GPU model should be displayed in the graphics card information;

- Check to see if the graphics card is listed on the following website: http://developer.nvidia.com/cuda-gpus

On the NVIDIA website, each CUDA-enabled graphics card has a “Compute Capability” listed. For cards with a compute capability of 1.2 or less, only the single precision version of the GPU Module can be utilised. However, benchmarking has indicated that the double precision version is NOT required and that the TUFLOW_iSP exe should be used for all TUFLOW HPC GPU simulations. Extensive GPU hardware benchmarking has been undertaken to assist users who are upgrading hardware for TUFLOW modelling. Over 50 different hardware options have been tested for speed. The results are provided on the Hardware Benchmarking page.
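The compute capability rule above can be expressed as a small check. This is a sketch only: the function name `supports_double_precision` is hypothetical, and the compute capability string is assumed to be copied by hand from the NVIDIA website in "major.minor" form.

```python
def supports_double_precision(compute_capability):
    """Return True if a card with this compute capability (e.g. "1.2", "8.6")
    is above the 1.2 threshold below which only single precision is usable."""
    major, minor = (int(part) for part in compute_capability.strip().split("."))
    # Compare as (major, minor) integers rather than as a float,
    # so that a value such as "1.10" is not misread as 1.1.
    return (major, minor) > (1, 2)


assert not supports_double_precision("1.2")
assert supports_double_precision("1.3")
```

Since the benchmarking recommendation is to use the single precision executable for all GPU simulations, a check like this is mainly relevant when confirming that an older card can be used at all.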

Benefits of HPC

So what does this mean for modellers?

By providing the ability to run models on graphics cards, TUFLOW HPC achieves significantly shorter model run times. This makes it practical to run continuous hydraulic models at higher cell resolution, across larger extents and for more scenarios. Common TUFLOW HPC applications include:

- Monte Carlo design assessments

- Rainfall ensemble design assessments

- High resolution 1D underground / 2D above ground integrated urban drainage

- High resolution floodplain lumped hydrology / hydraulic modelling (either fully 2D or including nested 1D open channels and pipes)

- Whole of catchment direct rainfall

- Flood forecast modelling

- Long-term water resource management modelling

The unconditional stability and higher order accuracy of TUFLOW HPC also lend themselves well to highly transient situations, such as dam break assessments, where other solvers would either become unstable, lose accuracy or slow down impractically due to the need to solve at an extremely small timestep.
