ARR DATAHUB CANNOT BE ACCESSED


Ellis Symons (talk | contribs) No edit summary |


Revision as of 16:10, 30 October 2018

Purpose

This page has been set up for the case where the ARR datahub cannot be accessed by the 'Extract ARR2016 for TUFLOW' tool in QGIS, but can still be accessed manually by the user. This situation may occur during times of heavy automated traffic on the website.

The following error appears in log.txt when the tool cannot access the ARR website:

Failed to get data from ARR website

Workaround

There is a workaround for this situation. It requires the user to manually extract data from the ARR datahub, perform some file manipulation, then save the file in the correct location with the correct name. The tool has been set up to first attempt to access the ARR datahub. If this fails, it then looks for an existing copy of the extracted data.
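The "try online first, then fall back to a saved copy" behaviour described above can be illustrated with a minimal Python sketch. This is not the tool's actual code: the function name `load_arr_data`, its parameters, and the use of `OSError` to signal a failed download are all assumptions made for illustration.

```python
from pathlib import Path
from typing import Callable


def load_arr_data(fetch_online: Callable[[], str], local_copy: Path) -> str:
    """Return datahub text, preferring a live download over a saved copy.

    Hypothetical helper: `fetch_online` stands in for whatever the tool
    uses to query the datahub, and `local_copy` for the manually saved file.
    """
    try:
        # First attempt: ask the datahub directly.
        return fetch_online()
    except OSError:
        # Download failed (urllib's URLError is an OSError subclass):
        # fall back to a manually saved file, if one exists.
        if local_copy.is_file():
            return local_copy.read_text(encoding="utf-8")
        raise RuntimeError("Failed to get data from ARR website")
```

The point of the sketch is only the ordering: a saved file is consulted solely after the online request has failed, which is why the manually prepared file must already be in place before the tool is run again.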

Steps

- Run the 'Extract ARR2016 for TUFLOW' tool in QGIS until the error occurs. This lets the tool download the data from BOM and perform the pre-calculations, such as extracting the catchment area and centroid from the input catchments

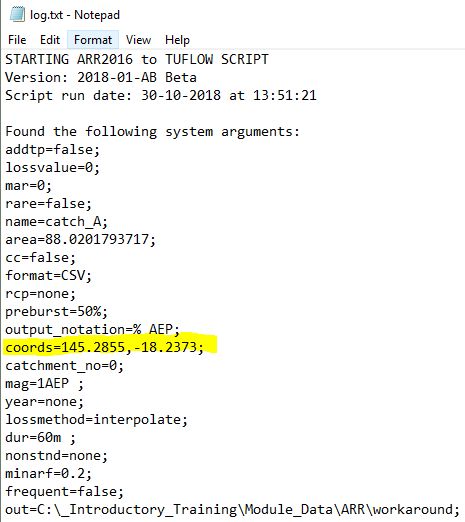

- Open 'log.txt', located in the tool output folder, and note the longitude and latitude values

- Using an internet browser, navigate to the ARR datahub

- Input the coordinates of your catchment

- Tick the 'Select All' box

- Click Submit

- Navigate to the bottom of the page and click Download TXT

- Create a new .txt file called 'ARR_Web_Data_<catchment name>.txt' and save it in the data folder alongside the existing file 'BOM_raw_web_<catchment name>.html'. The catchment name should match the name used in the QGIS tool. It is the same as the name in the BOM raw output and is also listed in log.txt after name=

- Copy the text from the 'Download TXT' output into the newly created 'ARR_web_data' text file

- On the ARR datahub page, navigate to the temporal patterns and click Download (.zip)

- Extract the contents of the zip file

- Open '_increments.csv' in a text editor

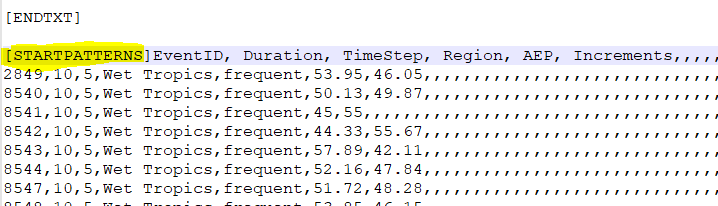

- Copy the contents of the csv to the end of 'ARR_Web_data'

- In front of 'EventID' in the newly copied data, insert the following text: [STARTPATTERNS]

- Save 'ARR_web_data_'

- Run the tool in QGIS again. The tool should now first try to access the datahub online. If this fails, it will then look for a pre-existing datahub output with temporal patterns included
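
The copy-and-mark steps above (append the increments csv to the 'ARR_web_data' file and insert [STARTPATTERNS] in front of 'EventID') can also be done with a short script instead of a text editor. The following is a minimal sketch, not part of the tool: the function name `append_patterns` is hypothetical, UTF-8 encoding is assumed, and placing the marker on its own line above the csv header is an assumption that should be checked against the screenshots on this page.

```python
from pathlib import Path

# Assumption: the marker sits on its own line above the csv header.
# Remove the "\n" if it should share a line with 'EventID'.
MARKER = "[STARTPATTERNS]\n"


def append_patterns(web_data: Path, increments_csv: Path) -> None:
    """Append the temporal pattern csv to the manually saved datahub file."""
    patterns = increments_csv.read_text(encoding="utf-8")
    start = patterns.find("EventID")
    if start == -1:
        raise ValueError(f"'EventID' header not found in {increments_csv}")
    # Insert the marker in front of 'EventID' in the copied data.
    patterns = patterns[:start] + MARKER + patterns[start:]
    existing = web_data.read_text(encoding="utf-8")
    # Make sure the appended block starts on a fresh line.
    if existing and not existing.endswith("\n"):
        existing += "\n"
    web_data.write_text(existing + patterns, encoding="utf-8")
```

It would be called with the two file paths from the steps above, for example `append_patterns(Path("ARR_Web_Data_<catchment name>.txt"), Path(...))` with the second path pointing at the extracted increments csv. Run it only once per file, since each call appends another copy of the patterns.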